How user research can reveal non-user-centric systems: the ratings case

In the User Research Team at Cabify, we are quite proud of how we work. Over the last two years, we have been building a work process that meets the needs of the company without sacrificing the quality we are looking for. Here, we want to share one of our latest projects, in which we made a series of decisions aimed at gaining a deep understanding before redesigning a key feature of the app.

In our view, there is no “one size fits all” when creating a research design. However, every user research project rests on an underlying vision of how the researchers think it should be done. In our case, we do not just repeat what we did last time when a new research request comes in. Instead, we start a thinking process to define the scope of the research. During this process, we face a series of choices where we can either extend or reduce that scope. Some choices are naturally limited by constraints outside the researcher’s control (time, budget, and availability), while others depend on the researcher’s capacity to challenge the boundaries of the research. It is this capacity that defines the impact of the findings on the organization and, of course, on the users.

**We always try to dig as deep as possible**, and here is an example of how we do it:

Redefining the rating system at Cabify: a project with many possible approaches

A rating system has become a standard way for companies to get information about the service they offer and to detect possible improvements. At Cabify we had a five-star system that let users rate each trip once it was over. Although this is a very common system, fewer than 25% of trips were rated, so the Product Manager in charge, Tania*, asked the User Research Team for help.

*You can read her experience with the project here.

Step 1: Defining our goal

When working as a researcher on a project like this, you can approach the challenge in two different ways:

- OPTION A: “OK, we can talk with the designer on the project, Marta, to get some new proposals to evaluate and see which one of them is the most attractive for the users.”
- OPTION B: “Good, it would be necessary to understand how users perceive a rating system when using the service and what kind of things they want to communicate with it.”

We went for option B because it made sense to take a step back and even question whether a rating system provides value to the users at all. If we had reduced the scope to testing different design proposals, it would have been harder to put the actual needs of the users at the center: the proposals would already be based on an assumption of what those needs are. We could even have wrongly validated a proposal that nobody needs, just by insisting on collecting feedback for improvements. Even though option B would require more time and resources, we knew it was going to be worth it: it would allow us to understand the users’ context of use (or lack thereof) and their expectations.

From this step, we were able to draw a simple but very important conclusion: our users consider a rating system essential to ensuring a high-quality service. They need it to exist in order to feel that Cabify listens to their opinion, and they even compare it with the traditional taxi, where they had no way to provide feedback and therefore no way to help improve the service.

Step 2: Defining the dimension

Once we had decided to explore the users’ motivations in depth instead of testing a proposal, it was time to define exactly what we wanted to do:

- OPTION A: “We can interview Cabify users to understand their needs and expectations for a rating system.”
- OPTION B: “Or maybe we should also include our drivers in the research because the ratings could somehow affect their experience too.”

And what did we do? We included both our users and our drivers in the research. The users’ ratings had a direct impact on the drivers, so having them participate was a must. If we had focused only on our users, we would not have understood the whole “journey” of a rating: from the moment the user gives it until it is converted into a score that the driver receives.

We became aware of a key problem for our drivers: they do not know how to decode their score. A generic number (4.8, for example) does not help them understand what they are doing right or wrong, and it frustrates them not to know what they can do to improve their work.

Step 3: Analyzing the results

Four weeks into the project, it was time to dig into the analysis, and once again we had to choose how to approach it:

- OPTION A: “Since we have done interviews and focus groups with both users and drivers, we can now analyze each target and create two independent reports to address their different needs.”
- OPTION B: “Now that we have integrated both targets in the research, we should analyze all the information together to get a holistic view of their needs and expectations.”

Again, we opted for the second one, because only this way could we reach the full potential of the analysis, making sure that the needs of the drivers were taken into account alongside those of the users, and vice versa.

Through this step, it became clear that our rating system was not as easy to use as intended. The question we asked our users seemed relatively simple (“Marta, please rate your driver”), but we realized that it was complex to answer because our users did not differentiate between aspects related to the driver, the service, and the app. As a result, they could react in many different ways. Some did not rate at all. Some gave a general rating based on a mix of their experience with the driver, the service, and the app, which was especially difficult when contradictory elements were present in the same trip (e.g. a good driver but problems with the app). Others simply gave a high score by default to avoid creating problems for the driver.

Our drivers also felt that something was off with the system. In their perception, they were taking the blame for events that they were not responsible for.

All in all, we had a rating system that did not work well for the users, the drivers, or Cabify: the system was broken.

Step 4: Sharing the results

In the last phase of the project, we had to choose the model we felt was most suitable for communicating the results:

- OPTION A: “We could gather the core product team who had requested the research and who were going to work on the redesign. If anybody else was interested in the results they could read the report or ask for a presentation.”
- OPTION B: “We could go beyond that and also involve all core teams outside of the product department that we thought could somehow be affected by this new knowledge.”

We chose the latter because it became clear during the process that the results pointed towards a complete redesign of the rating system. Creating a new system that made sense for the users, the drivers, and Cabify needed to be a team effort.

In this final step, we became ambassadors of the results. As researchers, we think it is important to strive to make what we have discovered in our projects matter in the whole organization.

And what was the outcome of this user-centric approach?

It led to the implementation of a new rating system that put the users of our app at the center of the solution. Instead of asking them to evaluate elements that were “external” to them, as before (driver, service, or app), the new system was centered on their own experience. In short, the question was turned around to focus on the user:

- From: “Marta, please rate your driver”
- To: “How was your trip?”

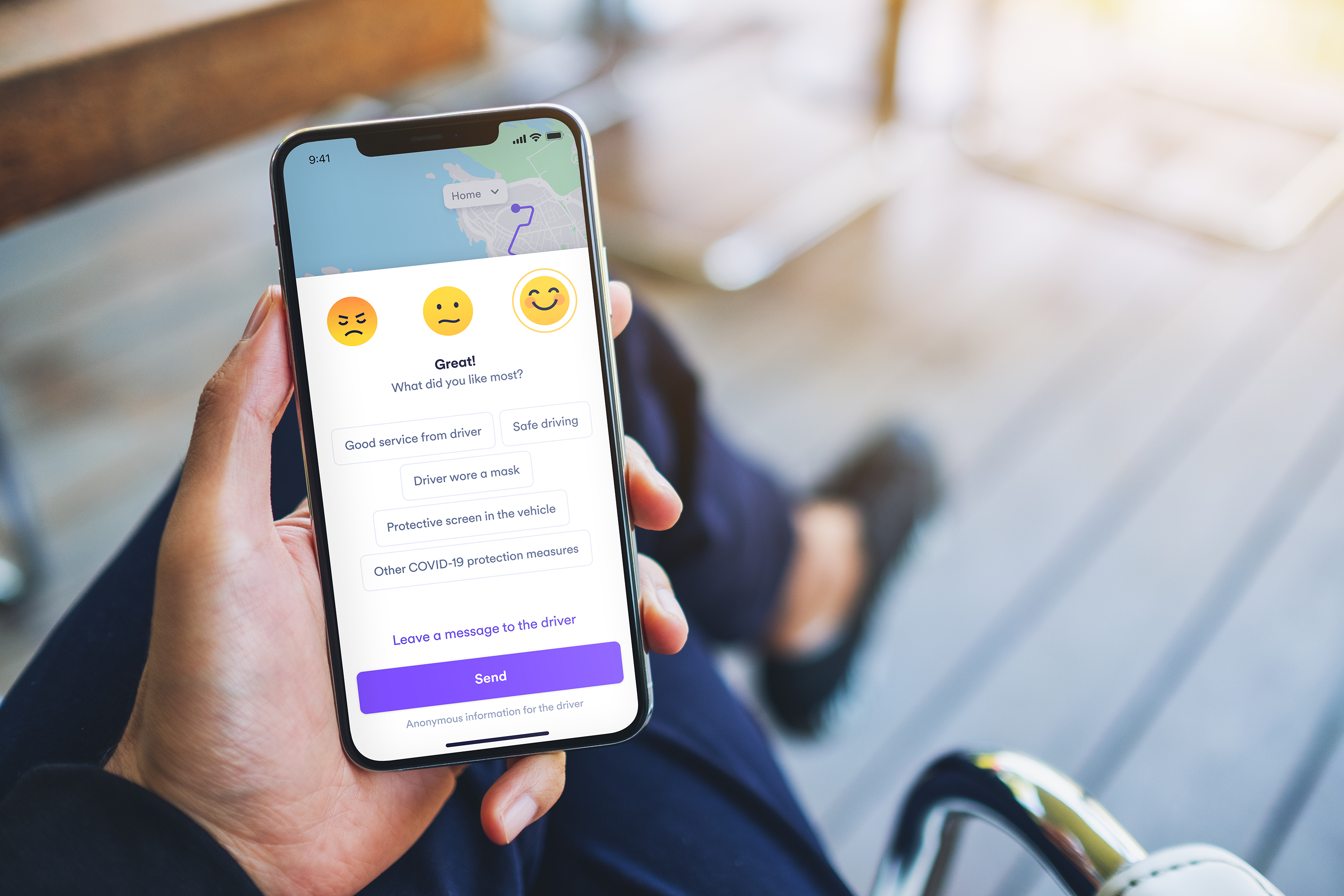

The idea was that everybody could answer how they felt about using the service. The ambiguous stars were replaced with emoticons (yes, emotions) and the option to select specific elements they had liked or would like to see improved.

This small but significant twist makes it easier for users to give feedback, and it also helps Cabify and the drivers understand the users’ needs. Moreover, as a measure of success, this change has already increased the number of ratings by 35% over the baseline.

Final notes: we are lucky researchers

What we wanted to convey with this article is that we feel fortunate to work in a place that provides the right combination of conditions to make this approach possible:

- As we work in-house, we have the freedom to propose additional topics to include in the same research, with the aim of increasing the company’s general knowledge.
- We rarely work with a fixed deadline, so we can continue with a project until we consider the result useful enough to create impact.
- And last but not least, we are lucky researchers because we work with a team who always opts for option B.

Written by Itsaso Mediavilla and Lærke Gry Hinrichsen

Did this text resonate with you? Check out opportunities to join the Cabify team here.
